By Lee Chalmers, University of Edinburgh

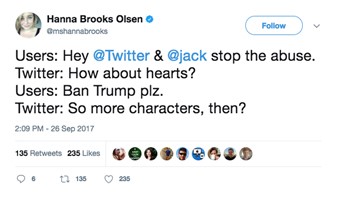

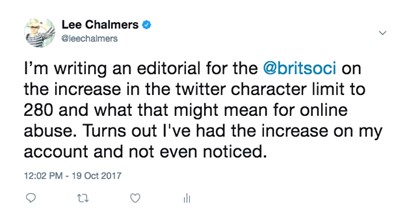

The social media platform Twitter recently announced that it was doubling its default 140-character limit to 280 characters. The change would be rolled out initially to a randomly selected group of users. The company's rationale for this unexpected move is that it is ‘giving you more characters to express yourself’ (Bhargava, 2017). Given that Twitter has a well-documented problem with online abuse, we might wonder what the point of this increase is. Doesn’t the extension simply give Twitter trolls a bigger platform?

There is a growing body of literature on the nature and form of Twitter trolling, and it points out the often gendered and raced nature of the abuse. In studying computer-mediated communication, Herring argues that women experience more harassment online than men, and that men are disproportionately the perpetrators of online vitriol (Herring, 1996, 1999). In the conclusion to their study of the linguistics of trolling, Hardaker & McGlashan (2016:92) note that “women were predominantly the target of these threats (both literally and grammatically)”. A study by the Guardian newspaper of “70 million comments left on its site since 2006 discovered that of the 10 most abused writers eight are women, and the two men are black” (Gardiner et al., 2016). The literature argues strongly that the online vitriol women suffer is sexually violent (Citron, 2011; Cole, 2015; Franks, 2011; Jane, 2012, 2014, 2015, 2016). It consists of rape threats, death threats and comments on women's bodies and sexual appeal, with a heavy focus on a desire to silence women through violence and sexual violence. A recent Amnesty International study tracking tweets sent to female MPs found that “Twitter is a toxic place for women” (Dhrodia, 2017). Why, then, did Twitter choose to increase the number of characters available to trolls rather than take further steps to reduce the abuse sent to women?

In February and March 2017, Twitter made changes to its platform aimed at reducing users’ experiences of abuse (Perez, 2017). These included updating how users can report abusive tweets, preventing the creation of new abusive accounts, introducing safer search results and reducing the notifications users receive when someone they have already muted starts a conversation. In my PhD research with feminist women who have experienced online abuse, many participants referred to these changes positively, saying that they now regularly mute or block people who send them abusive messages. They broadly welcome the changes, but many would like to see Twitter do more to stem the flood of abuse on the platform. That desire seems to be at odds with a culture of freedom of speech that downplays the effects of such abuse on citizens, particularly women.

Why did Twitter decide to raise the character limit? According to its blog, the intention was to recognise that some languages need more space than others to express the same ideas.

The issue with online abuse, however, is not necessarily the length of the messages that senders can write. It is often the volume received: the flood of messages. At one point, feminist campaigner Caroline Criado-Perez was receiving 50 abusive tweets a minute.* To what extent does it matter whether someone threatens to rape and kill you in 140 characters or in 280? If the comments from my research participants are anything to go by, the extra characters will make no substantial difference. What is disappointing is that Twitter seems to have time to look for ways to change the platform to encourage expression, whilst apparently struggling to find ways to block misogynists, racists and white supremacists. Technology could be used to identify certain keywords, such as ‘nazi’, ‘rape’ and ‘ni**er’. Trolls gather around hashtags such as #feminism, and algorithms could be set up to identify accounts that use these hashtags to harass and abuse women. What seems to be missing is the will to take a position on what constitutes hate crime and then to design limitations accordingly. In this light, Twitter's character increase is just not that exciting.

Bhargava, A., 2017. Twitter increases character limit to 280, internet erupts into flames. Mashable. Available at: http://mashable.com/2017/09/26/twitter-character-limit-280-reactions/#Oqud1FKQEOqn [Accessed October 19, 2017].

Dhrodia, A., 2017. We tracked 25,688 abusive tweets sent to women MPs – half were directed at Diane Abbott. New Statesman. Available at: https://www.newstatesman.com/2017/09/we-tracked-25688-abusive-tweets-sent-women-mps-half-were-directed-diane-abbott [Accessed October 19, 2017].

Perez, S., 2017. Twitter adds more anti-abuse measures focused on banning accounts, silencing bullying. TechCrunch. Available at: https://techcrunch.com/2017/03/01/twitter-adds-more-anti-abuse-measures-focused-on-banning-accounts-silencing-bullying/ [Accessed October 19, 2017].

Cole, K.K., 2015. “It’s Like She’s Eager to be Verbally Abused”: Twitter, Trolls, and (En)Gendering Disciplinary Rhetoric. Feminist Media Studies, 15(2), pp.356–358.

Hardaker, C. & McGlashan, M., 2016. “Real men don’t hate women”: Twitter rape threats and group identity. Journal of Pragmatics, 91, pp.80–93.

Jane, E.A., 2016. Online misogyny and feminist digilantism. Continuum, published online August 2016, pp.1–14.

Gardiner, B. et al., 2016. The dark side of Guardian comments. The Guardian.

Jane, E.A., 2015. Flaming? What flaming? The pitfalls and potentials of researching online hostility. Ethics and Information Technology, pp.67–81.

Citron, D.K., 2011. “Misogynistic Cyber Hate Speech”. Written testimony of Danielle Keats Citron to the UK Houses of Parliament.

Jane, E.A., 2014. “Back to the kitchen, cunt”: speaking the unspeakable about online misogyny. Continuum, 28(4), pp.558–570.

Franks, M., 2011. Unwilling Avatars: Idealism and Discrimination in Cyberspace. Columbia Journal of Gender and Law, 1(2), pp.224–261.

Herring, S.C., 1999. The Rhetorical Dynamics of Gender Harassment On-Line. The Information Society, 15(3), pp.151–167.

Herring, S.C., 1996. Posting in a different voice. In C. Ess, ed. Philosophical Perspectives on Computer-Mediated Communication. Albany: State University of New York Press, pp. 241–265.

Jane, E.A., 2012. “Your a Ugly, Whorish, Slut.” Feminist Media Studies, pp.1–16.

*Personal communication.